How to Delete a Folder or Files from an S3 Bucket

Last updated: Feb 26, 2024

# Delete an entire Folder from an S3 Bucket

To delete a folder from an AWS S3 bucket, use the s3 rm command, passing it the path of the objects to be deleted along with the --recursive parameter, which applies the action to all files under the specified path.

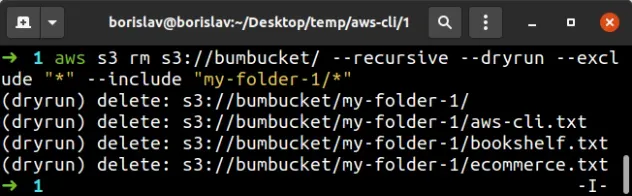

Let's first run the s3 rm command in test mode to make sure the output matches the expectations.

aws s3 rm s3://YOUR_BUCKET/ --recursive --dryrun --exclude "*" --include "my-folder/*"

The output shows that all of the files in the specified folder would get deleted.

The folder itself disappears as well. S3 has no real folders, only key prefixes, so a folder stops existing once no object key starts with it.

The order of the --exclude and --include parameters matters. Filters passed later in the command have higher precedence.

We passed the following parameters to the s3 rm command:

| Name | Description |
|---|---|
| --recursive | applies the s3 rm command to all nested objects under the specified path |
| --dryrun | shows the command's output without actually running it |
| --exclude | we only want to delete the contents of a specific folder, so we exclude all other paths in the bucket |
| --include | we include the path that matches all of the files we want to delete |

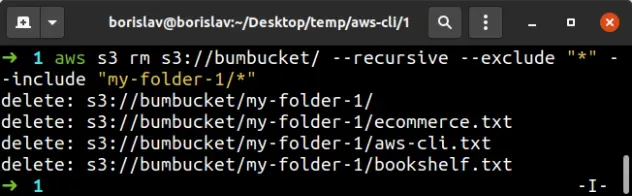

Now that we've made sure the output from the s3 rm command is what we expect, let's run it without the --dryrun parameter.

aws s3 rm s3://YOUR_BUCKET/ --recursive --exclude "*" --include "my-folder/*"

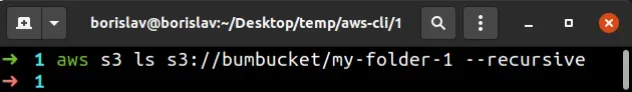
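The preview-then-delete routine above could be wrapped in a small shell function. This is only a sketch: the function name delete_prefix and its --yes switch are made up for illustration and are not part of the AWS CLI. It assumes the aws command is installed and configured.

```shell
# delete_prefix BUCKET PREFIX [--yes]
# Hypothetical helper (not part of the AWS CLI): previews the deletion of
# everything under PREFIX and only deletes for real when --yes is passed.
delete_prefix() {
  bucket=$1
  prefix=$2
  confirm=${3:-}

  if [ "$confirm" = "--yes" ]; then
    aws s3 rm "s3://$bucket/" --recursive --exclude "*" --include "$prefix/*"
  else
    # Default to a dry run so nothing is removed by accident.
    aws s3 rm "s3://$bucket/" --recursive --dryrun --exclude "*" --include "$prefix/*"
  fi
}

# Usage (YOUR_BUCKET and my-folder are placeholders):
#   delete_prefix YOUR_BUCKET my-folder         # preview only
#   delete_prefix YOUR_BUCKET my-folder --yes   # actually delete
```

Making the dry run the default means a forgotten argument results in a preview rather than a deletion.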

To verify all files in the folder have been successfully deleted, run the s3 ls command.

If the command receives a path that doesn't exist, it prints no output.

aws s3 ls s3://YOUR_BUCKET/YOUR_FOLDER --recursive
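In a script, the same check might look like the sketch below. The helper name prefix_is_empty is hypothetical, and the check relies only on whether s3 ls prints anything. A failed call (for example, missing credentials) also prints nothing to standard output, so treat a positive result with some care.

```shell
# prefix_is_empty BUCKET PREFIX
# Hypothetical helper: succeeds when `aws s3 ls` prints nothing for PREFIX.
prefix_is_empty() {
  listing=$(aws s3 ls "s3://$1/$2/" --recursive)
  [ -z "$listing" ]
}

# Usage (YOUR_BUCKET and YOUR_FOLDER are placeholders):
#   if prefix_is_empty YOUR_BUCKET YOUR_FOLDER; then
#     echo "YOUR_FOLDER no longer contains any objects"
#   fi
```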

Always run s3 rm with the --dryrun parameter first. This lets you make sure that the command does what you intend before anything is deleted.

# Filter which Files to Delete from an S3 Bucket

Here is an example of deleting multiple files from an S3 bucket with AWS CLI.

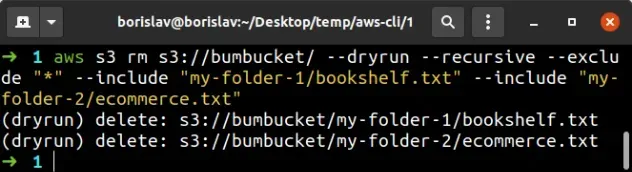

Let's run the command in test mode first. By setting the --dryrun parameter, we instruct the AWS CLI to only print the outputs of the s3 rm command, without actually running it.

aws s3 rm s3://YOUR_BUCKET/YOUR_FOLDER/ --dryrun --recursive --exclude "*" --include "file1.txt" --include "file2.txt"

The output shows the names of the files that would get deleted, had we run the command without the --dryrun parameter.

The order of the --exclude and --include parameters matters. Filters passed later in the command have higher precedence and override those that come before them.

This means that passing the --exclude "*" parameter after --include "file1.txt" would exclude every file again, so the command would not delete anything.

In the example above, both of the files are located in the same folder. Otherwise, we would include the path to each file in the --include parameters:

aws s3 rm s3://YOUR_BUCKET/ --dryrun --recursive --exclude "*" --include "folder1/file1.txt" --include "folder2/file2.txt"
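When the list of files is longer, the --include parameters could be generated instead of typed by hand. The sketch below assumes a hypothetical helper named delete_keys and keeps --dryrun in place, so it only previews the deletion. Keys that contain *, ? or [ would be treated as patterns rather than literal names.

```shell
# delete_keys BUCKET KEY...
# Hypothetical helper: previews the deletion of the listed keys by passing
# one --include filter per key after a catch-all --exclude "*".
delete_keys() {
  bucket=$1
  shift

  # Rewrite the positional parameters from "key1 key2 ..." to
  # "--include key1 --include key2 ...".
  for key in "$@"; do
    set -- "$@" --include "$key"
    shift
  done

  aws s3 rm "s3://$bucket/" --dryrun --recursive --exclude "*" "$@"
}

# Usage (placeholders):
#   delete_keys YOUR_BUCKET folder1/file1.txt folder2/file2.txt
```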

To run the s3 rm operation for real, simply remove the --dryrun parameter.

The --include parameter can also be set as a matcher. For example, to delete all files with a .png extension under a specific prefix, use the following command.

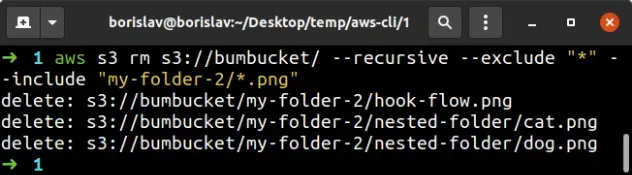

aws s3 rm s3://YOUR_BUCKET/ --recursive --exclude "*" --include "folder2/*.png"

Note that the --include parameter also matches files with the .png extension in nested directories. The command deleted my-folder/image.png as well as my-folder/nested-folder/another-image.png.
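The nested matches happen because * is not stopped by the / character. Shell case patterns treat * the same way, so they can be used to get a feel for what a pattern will catch. The following is an illustration with made-up keys and a hypothetical filter_keys function. It does not call the AWS CLI and is not how the CLI is implemented.

```shell
# filter_keys PATTERN
# Reads object keys from stdin and prints those that match PATTERN.
# In a case pattern, * also matches "/", so nested keys are matched too.
filter_keys() {
  while IFS= read -r key; do
    case $key in
      $1) printf '%s\n' "$key" ;;
    esac
  done
}

printf '%s\n' \
  'folder2/a.png' \
  'folder2/nested/b.png' \
  'folder2/c.txt' \
  'other/d.png' | filter_keys 'folder2/*.png'
# prints:
#   folder2/a.png
#   folder2/nested/b.png
```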

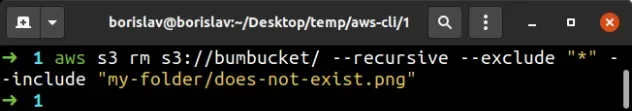

Running the s3 rm command with an --include parameter that does not match any files produces no output.

The --include and --exclude parameters are used for fine-grained filtering.

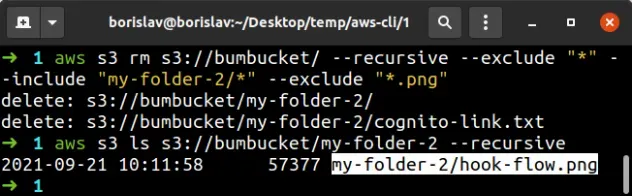

The following command deletes all objects in the folder, except for objects with the .png extension:

aws s3 rm s3://YOUR_BUCKET/ --recursive --exclude "*" --include "my-folder/*" --exclude "*.png"

The output of the s3 ls command shows that the image at the path my-folder-2/hook-flow.png has not been deleted.

If you wanted to preserve all .png and all .txt files, you would just add another --exclude "*.txt" flag at the end of the command.
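To reason about a chain of filters before touching a bucket, we can model the rule in a few lines of shell. This is a simplified model, not the AWS CLI's own code: it assumes every key starts out included, filters are applied from left to right, and the last filter that matches a key decides its fate. The function name would_delete and the sample keys are hypothetical.

```shell
# would_delete KEY [--exclude PATTERN | --include PATTERN]...
# Simplified model of the filter rules: succeeds if KEY is still selected
# after all filters have been applied in order (last match wins).
would_delete() {
  candidate=$1
  shift
  selected=yes   # everything is included by default
  while [ "$#" -ge 2 ]; do
    case $candidate in
      $2)
        case $1 in
          --exclude) selected=no ;;
          --include) selected=yes ;;
        esac
        ;;
    esac
    shift 2
  done
  [ "$selected" = yes ]
}

for key in my-folder/notes.txt my-folder/logo.png other/readme.md; do
  if would_delete "$key" --exclude "*" --include "my-folder/*" --exclude "*.png"; then
    echo "delete: $key"
  else
    echo "keep:   $key"
  fi
done
# prints:
#   delete: my-folder/notes.txt
#   keep:   my-folder/logo.png
#   keep:   other/readme.md
```

In this model, putting --exclude "*" last would leave nothing selected, since it matches every key and overrides all earlier filters.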

The order of the --exclude and --include parameters is very important. For instance, if we reversed the order and passed --include "my-folder-2/*" before the --exclude "*" parameter, the command would not delete anything, because the exclude comes after the include and overrides it.

Finally, let's look at an example where we have the following folder structure:

- bucket
  - my-folder-3/
    - image.webp
    - file.json
    - nested-folder/
      - file.txt
      - file.json

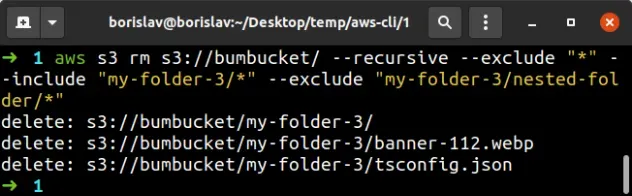

We have a nested folder that we want to preserve, but we want to delete all of the files in the my-folder-3 directory.

aws s3 rm s3://YOUR_BUCKET/ --recursive --exclude "*" --include "my-folder-3/*" --exclude "my-folder-3/nested-folder/*"

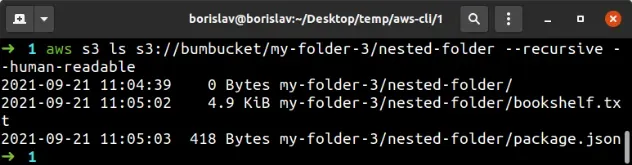

We can run the s3 ls command to verify the nested folder didn't get deleted.

aws s3 ls s3://YOUR_BUCKET/my-folder-3/nested-folder --recursive --human-readable

The output shows that the nested folder was excluded successfully and has not been deleted.
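As an extra safeguard for commands like this, the --dryrun output can be checked automatically before the real run. The sketch below assumes that each dry-run line has the form "(dryrun) delete: s3://BUCKET/KEY". The helper name dryrun_touches is hypothetical.

```shell
# dryrun_touches BUCKET PREFIX
# Hypothetical helper: reads `aws s3 rm --dryrun` output on stdin and
# succeeds if any line reports a deletion under s3://BUCKET/PREFIX.
dryrun_touches() {
  target="(dryrun) delete: s3://$1/$2"
  found=1
  while IFS= read -r line; do
    case $line in
      "$target"*) found=0 ;;   # quoted, so the prefix is matched literally
    esac
  done
  return "$found"
}

# Usage (YOUR_BUCKET is a placeholder):
#   aws s3 rm s3://YOUR_BUCKET/ --recursive --dryrun --exclude "*" \
#       --include "my-folder-3/*" --exclude "my-folder-3/nested-folder/*" |
#     dryrun_touches YOUR_BUCKET my-folder-3/nested-folder/ &&
#     echo "nested-folder would be affected, aborting" >&2
```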
