Copy a Local Folder to an S3 Bucket

Last updated: Feb 26, 2024

# Table of Contents

- Copy a Local Folder to an S3 Bucket

- Copy all files between S3 Buckets with AWS CLI

- Copy Files under a specific Path between S3 Buckets

- Filtering which Files to Copy between S3 Buckets

- Exclude multiple Folders with AWS S3 Sync

# Copy a Local Folder to an S3 Bucket

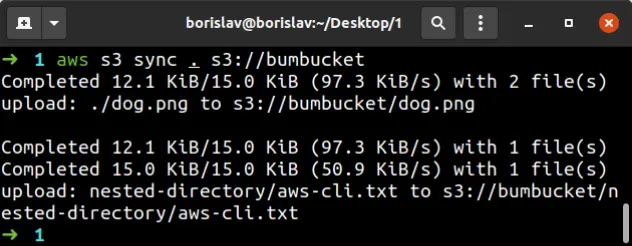

To copy the files from a local folder to an S3 bucket, run the s3 sync command, passing it the source directory and the destination bucket as inputs.

Let's look at an example that copies the files from the current directory to an S3 bucket.

Open your terminal in the directory that contains the files you want to copy and run the s3 sync command.

aws s3 sync . s3://YOUR_BUCKET

The output shows that the files and folders contained in the local directory were successfully copied to the S3 Bucket.

You can also pass the directory as an absolute path, for example:

On Linux or macOS:

aws s3 sync /home/john/Desktop/my-folder s3://YOUR_BUCKET

On Windows:

aws s3 sync C:\Users\USERNAME\my-folder s3://YOUR_BUCKET

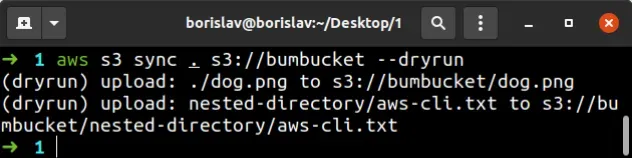

To test the command first, add the --dryrun parameter. It prints the operations the command would perform without actually running them.

aws s3 sync . s3://YOUR_BUCKET --dryrun

The s3 sync command copies an object from the local folder to the destination bucket if any of the following is true:

- the size of the objects differs.

- the last modified time of the source is newer than the last modified time of the destination.

- the S3 object doesn't exist under the specified prefix in the destination bucket.

This means that if we had a document.pdf file in both the local directory and the destination bucket, it would only get copied if:

- the size of the document differs.

- the last modified time of the document in the local directory is newer than the last modified time of the document in the destination bucket.
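The three conditions above can be sketched as a small shell function. This is a simplified model for illustration, not the AWS CLI's actual implementation; the function name should_copy and the sizes and timestamps passed to it are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified model of the copy decision described above.
# This is NOT the AWS CLI's real code, only an illustration of the three rules.
#
# should_copy SRC_SIZE SRC_MTIME DST_SIZE DST_MTIME
#   Sizes are in bytes, mtimes are Unix timestamps in seconds.
#   Pass an empty DST_SIZE to mean "the object is missing at the destination".
should_copy() {
  local src_size="$1" src_mtime="$2" dst_size="$3" dst_mtime="$4"

  # The object does not exist under the destination prefix.
  if [ -z "$dst_size" ]; then
    echo "copy (missing at destination)"
    return 0
  fi
  # The sizes differ.
  if [ "$src_size" -ne "$dst_size" ]; then
    echo "copy (size differs)"
    return 0
  fi
  # The source was modified more recently than the destination.
  if [ "$src_mtime" -gt "$dst_mtime" ]; then
    echo "copy (source is newer)"
    return 0
  fi
  echo "skip"
  return 1
}

should_copy 1024 1700000500 "" ""                    # copy (missing at destination)
should_copy 2048 1700000000 1024 1700000500          # copy (size differs)
should_copy 1024 1700000500 1024 1700000000          # copy (source is newer)
should_copy 1024 1700000000 1024 1700000500 || true  # skip
```

The last call models a document.pdf that has the same size in both places and an older local copy, so it is skipped.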

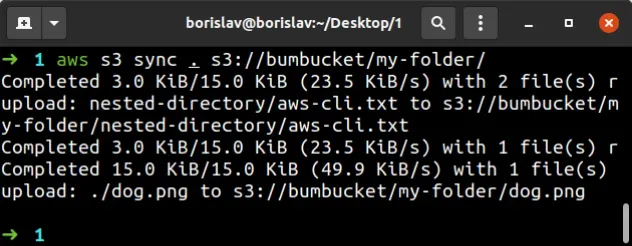

To copy a local folder to a specific folder in an S3 bucket, run the s3 sync command, passing in the source directory and the full bucket path, including the directory name.

The following command copies the contents of the current folder to a my-folder directory in the S3 bucket.

aws s3 sync . s3://YOUR_BUCKET/my-folder/

The output shows that example.txt was copied to bucket/my-folder/example.txt.

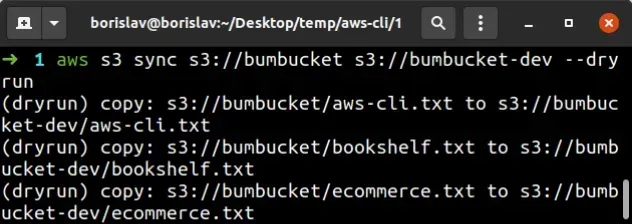

# Copying all files between S3 Buckets with AWS CLI

To copy files between S3 buckets with the AWS CLI, run the s3 sync command, passing in the paths of the source and destination buckets. The command recursively copies files from the source to the destination bucket.

Let's run the command in test mode first. By setting the --dryrun parameter we can verify the command produces the expected output, without actually running it.

aws s3 sync s3://SOURCE_BUCKET s3://DESTINATION_BUCKET --dryrun

The output of the command shows that without the --dryrun parameter, it would have copied the contents of the source bucket to the destination bucket.

To actually copy the files, run the command again without the --dryrun parameter.

The s3 sync command copies an object from the source to the destination bucket if any of the following is true:

- the size of the objects differs.

- the last modified time of the source is newer than the last modified time of the destination.

- the S3 object does not exist under the specified prefix in the destination bucket.

This means that if we had an image.png file in both the source and the destination buckets, it would only get copied if:

- the size of the image differs.

- the last modified time of the image in the source is newer than the last modified time of the destination image.

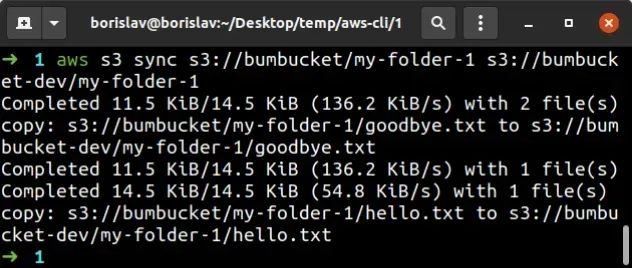

# Copying Files under a specific Path between S3 Buckets

To copy files under a specific prefix between S3 buckets, run the s3 sync command, passing in the complete source and destination bucket paths.

aws s3 sync s3://SOURCE_BUCKET/my-folder s3://DESTINATION_BUCKET/my-folder

This time I ran the command without the --dryrun parameter. The output shows that the files from SOURCE_BUCKET/my-folder have been copied to DESTINATION_BUCKET/my-folder.

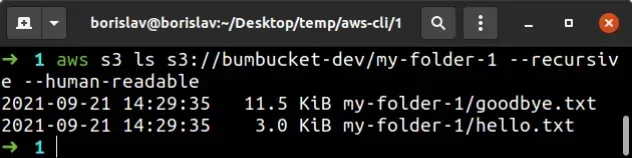

To verify the files got copied successfully, run the s3 ls command.

aws s3 ls s3://DESTINATION_BUCKET/YOUR_FOLDER --recursive --human-readable

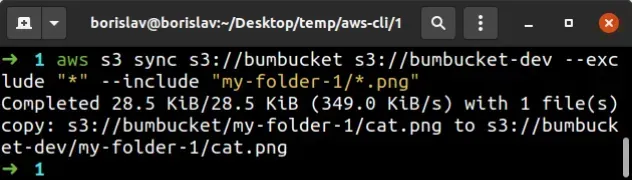

# Filtering which Files to Copy between S3 Buckets

To filter which files get copied from the source to the destination bucket, run the s3 sync command, passing in the --exclude and --include parameters.

Let's look at an example where we copy all of the files with the .png extension under a specific folder from the source to the destination bucket.

aws s3 sync s3://SOURCE_BUCKET s3://DESTINATION_BUCKET --exclude "*" --include "my-folder-1/*.png"

The order of the --exclude and --include parameters matters. Parameters passed later in the command have higher precedence.

This means that if we reverse the order and pass the --include "my-folder-1/*.png" parameter first, followed by --exclude "*", we would exclude all files from the command and not copy anything.
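The precedence rule described above can be modeled with a short shell function. This is a sketch under the assumption that every file starts out included and that the last matching filter decides; the function name is_included and the key my-folder-1/logo.png are hypothetical, and shell glob matching is used as a stand-in for the CLI's own pattern matching.

```shell
#!/usr/bin/env bash
# Simplified model of filter precedence: every key starts out included,
# filters are checked in the order given, and the last matching one decides.
#
# is_included KEY [--exclude PATTERN | --include PATTERN]...
is_included() {
  local key="$1" decision="include" flag pattern
  shift
  while [ "$#" -ge 2 ]; do
    flag="$1"
    pattern="$2"
    shift 2
    # An unquoted $pattern is treated as a glob here; "*" also matches "/".
    case $key in
      $pattern) decision="${flag#--}" ;;
    esac
  done
  [ "$decision" = "include" ]
}

key="my-folder-1/logo.png"   # hypothetical object key

if is_included "$key" --exclude "*" --include "my-folder-1/*.png"; then
  echo "exclude first, include second: copied"
else
  echo "exclude first, include second: skipped"
fi

if is_included "$key" --include "my-folder-1/*.png" --exclude "*"; then
  echo "include first, exclude second: copied"
else
  echo "include first, exclude second: skipped"
fi
```

With the filters in the original order the key is copied. With the order reversed, the trailing --exclude "*" matches last and the key is skipped.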

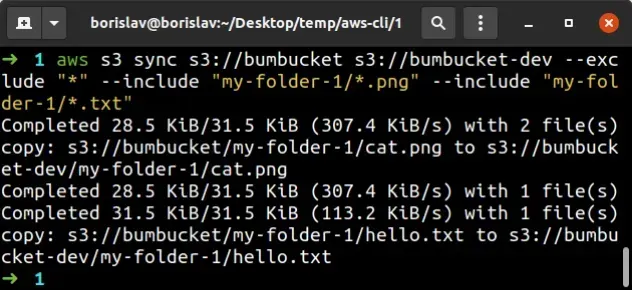

You can repeat the --include and --exclude parameters as many times as necessary. For example, the following command copies all files with the .png and .txt extensions under the my-folder-1 directory from the source to the destination bucket.

aws s3 sync s3://SOURCE_BUCKET s3://DESTINATION_BUCKET --exclude "*" --include "my-folder-1/*.png" --include "my-folder-1/*.txt"

The output of the command shows that all files with .png and .txt extensions under the my-folder-1 directory were successfully copied to the destination bucket.

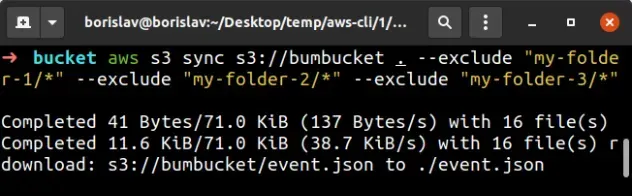

# How to Exclude multiple Folders with AWS S3 Sync

To exclude multiple folders when using S3 sync, pass multiple --exclude parameters to the command, specifying the path to the folders you want to exclude.

aws s3 sync s3://YOUR_BUCKET . --exclude "my-folder-1/*" --exclude "my-folder-2/*" --exclude "my-folder-3/*"

In the example above we used the --exclude parameter to filter out 3 folders from the sync command.

Because we passed the root of the bucket after the sync keyword in the command (e.g. s3://my-bucket), we have to specify the whole path for the values of the --exclude parameter.
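When the list of folders is long, the repeated --exclude parameters can be generated instead of typed by hand. The following is a minimal sketch that only prints the resulting command so it can be reviewed before running; the helper name build_exclude_args is hypothetical, and it assumes folder names without spaces or quotes.

```shell
#!/usr/bin/env bash
# Print one --exclude argument per folder name passed in.
# Sketch only: assumes folder names contain no spaces or quote characters.
build_exclude_args() {
  local folder
  for folder in "$@"; do
    printf '%s "%s/*" ' --exclude "$folder"
  done
}

# Print the full command instead of running it, so it can be checked first.
echo "aws s3 sync s3://YOUR_BUCKET . $(build_exclude_args my-folder-1 my-folder-2 my-folder-3)--dryrun"
```

The script prints the same command as the example above with --dryrun appended, which can then be pasted into the terminal.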

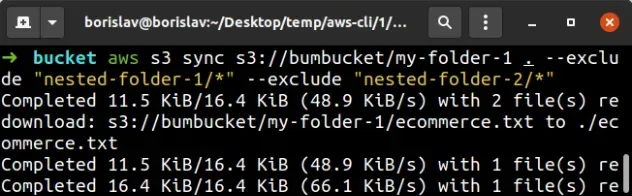

The value of the --exclude parameter must be in double quotes when issuing the command on Windows.

Let's look at an example where we have the following folder structure in the S3 bucket:

- my-folder-1/
  - file1.txt
  - file2.txt
  - nested-folder-1/
  - nested-folder-2/

We want to exclude nested-folder-1 and nested-folder-2 from the sync command and both of them are in the my-folder-1 directory.

Therefore we can append the my-folder-1 path to the bucket name, instead of repeating it in the value of every --exclude parameter.

aws s3 sync s3://YOUR_BUCKET/my-folder-1 . --exclude "nested-folder-1/*" --exclude "nested-folder-2/*"

In the example above we appended the my-folder-1 path to the bucket name, which means that the values of all of our --exclude parameters are relative to that path.
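To illustrate why the shorter patterns work, here is a small sketch that matches a pattern against the part of the object key that follows the source path. It is a simplified model, not the CLI's implementation; the function name matches_exclude and the file a.txt are hypothetical.

```shell
#!/usr/bin/env bash
# Illustration only: a filter pattern is compared with the key relative to
# the source path given to the command, not with the full object key.
#
# matches_exclude SOURCE_PREFIX FULL_KEY PATTERN
matches_exclude() {
  local source_prefix="$1" full_key="$2" pattern="$3"
  # Strip the source prefix and the "/" that follows it from the full key.
  local relative_key="${full_key#"$source_prefix"/}"
  case $relative_key in
    $pattern) return 0 ;;
    *) return 1 ;;
  esac
}

full_key="my-folder-1/nested-folder-1/a.txt"   # hypothetical object key

# Source is s3://YOUR_BUCKET/my-folder-1, so the short pattern matches.
matches_exclude "my-folder-1" "$full_key" "nested-folder-1/*" && echo "excluded"

# Source is the bucket root, so the same pattern no longer matches.
matches_exclude "" "$full_key" "nested-folder-1/*" || echo "not excluded"
```

When syncing from the bucket root, the pattern would need the full path, for example "my-folder-1/nested-folder-1/*".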

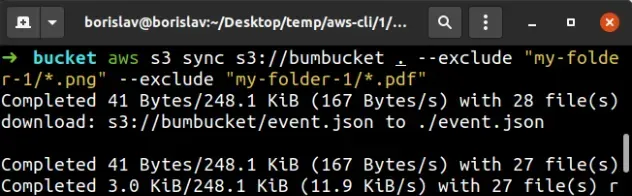

You can also use the --exclude parameter to filter out specific files, including using wildcards.

The following example excludes all files with the .png and .pdf extensions that are in the my-folder-1 directory.

aws s3 sync s3://YOUR_BUCKET . --exclude "my-folder-1/*.png" --exclude "my-folder-1/*.pdf"

In the example above we excluded all of the .png and .pdf files in the my-folder-1 directory.

However, files with other extensions in that folder have not been excluded, and neither have .png or .pdf files in other directories in the bucket.
