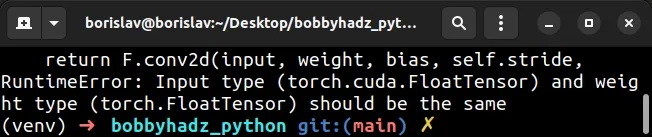

RuntimeError: Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same

Last updated: Apr 12, 2024

# RuntimeError: Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same

The article addresses the following 2 related errors:

- RuntimeError: Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same

- RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu

Both errors have the same solution.

The PyTorch "RuntimeError: Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same" occurs when your model's weights are on the GPU but your input data is on the CPU.

To solve the error, use the `torch.cuda.is_available()` method to select a device, and then send your input tensors to that device (the GPU, when one is available).

```python
import torch

# ✅ Check if CUDA is available when calling `device()`
device = torch.device(
    'cuda:0' if torch.cuda.is_available() else 'cpu'
)

# `model` and `dataloader` are assumed to be defined elsewhere;
# `model` must already be on `device`
for data in dataloader:
    inputs, labels = data

    inputs = inputs.to(device)
    labels = labels.to(device)

    outputs = model(inputs)
```

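The `torch.device()` constructor creates an object that represents the device (a type such as `cpu` or `cuda`, plus an optional index) on which a tensor is or will be allocated.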

```python
# ✅ Check if CUDA is available when calling `device()`
device = torch.device(
    'cuda:0' if torch.cuda.is_available() else 'cpu'
)
```

The `torch.cuda.is_available()` method returns a boolean that indicates whether CUDA is currently available.

If the method returns `True`, the device is set to `cuda:0` (the first GPU); otherwise, it is set to `cpu`.
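To make the `cuda:0` string less opaque, here is a small sketch that inspects the parts of a device object. Constructing a `torch.device` only describes a device, so this runs on CPU-only machines as well.

```python
import torch

# Building a device object does not allocate anything or require a GPU
gpu = torch.device('cuda:0')
cpu = torch.device('cpu')

print(gpu.type, gpu.index)  # cuda 0
print(cpu.type, cpu.index)  # cpu None

# The expression from the article resolves to one of the two
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print(device)
```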

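The `torch.Tensor.to()` method moves tensors from the CPU to the GPU and from the GPU to the CPU. It does not modify the tensor in place, so assign the result back to a variable (e.g. `inputs = inputs.to(device)`).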

```python
for data in dataloader:
    inputs, labels = data

    inputs = inputs.to(device)
    labels = labels.to(device)

    outputs = model(inputs)
```

The method can also be used for dtype conversion, e.g. `tensor.to(torch.float64)`.
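The following minimal sketch shows `to()` handling a device move, a dtype conversion, and both at once. It also shows that the original tensor is left untouched. The tensor `x` is just example data.

```python
import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
x = torch.ones(3)  # float32 tensor on the CPU

# .to() never modifies `x` in place; it returns a converted tensor
# (or `x` itself when nothing needs to change)
y = x.to(device)                        # device move only
z = x.to(torch.float64)                 # dtype conversion only
w = x.to(device, dtype=torch.float16)   # device and dtype in one call

print(x.dtype, x.device)  # torch.float32 cpu
print(z.dtype)            # torch.float64
```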

You can also place the ternary operator (Python's conditional expression) outside the `torch.device()` call to achieve the same result.

```python
import torch

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

for data in dataloader:
    inputs, labels = data

    inputs = inputs.to(device)
    labels = labels.to(device)

    outputs = model(inputs)
```

The `torch.device()` constructor is called with the string "cuda" if CUDA is currently available; otherwise, it is called with the string "cpu".
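The snippets above assume that `model` and `dataloader` already exist. Below is a self-contained sketch of the complete fix. The toy `nn.Linear(4, 2)` model and the random `TensorDataset` are stand-ins for your own model and data. The key point is that the model and every batch end up on the same device.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

# Pick the GPU when available, otherwise fall back to the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Toy model and random data, for illustration only
model = nn.Linear(4, 2).to(device)  # the weights now live on `device`
dataset = TensorDataset(torch.randn(8, 4), torch.randint(0, 2, (8,)))
dataloader = DataLoader(dataset, batch_size=4)

for inputs, labels in dataloader:
    # The DataLoader yields CPU tensors, so move each batch to the model's device
    inputs = inputs.to(device)
    labels = labels.to(device)
    outputs = model(inputs)

print(outputs.shape, outputs.device)
```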

# Using the cuda() method to solve the error

You can also use the cuda() method to send your input tensors to the GPU.

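```python
import torch

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')
print(device)

# `model` (already on the GPU) and `dataloader` are assumed to be defined elsewhere
for data in dataloader:
    inputs, labels = data

    # 👇️ call cuda() (raises an error if CUDA is not available)
    inputs = inputs.cuda()
    # 👇️ call cuda()
    labels = labels.cuda()

    outputs = model(inputs)
```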

The `torch.cuda` package adds support for CUDA tensor types, which implement the same functions as CPU tensors but use GPUs for computation.

You can use the `is_available()` method to check whether your system supports CUDA, as shown in the code sample. Note that `cuda()` raises an error when CUDA isn't available.
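Because `cuda()` fails on CPU-only machines, you might guard the call. Here is a minimal sketch, in which `maybe_cuda` is a hypothetical helper name and not part of PyTorch:

```python
import torch

def maybe_cuda(t: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: call .cuda() only when CUDA is available."""
    if torch.cuda.is_available():
        return t.cuda()  # copies the tensor to the current GPU
    return t  # leave the tensor on the CPU

inputs = maybe_cuda(torch.randn(2, 3))
print(inputs.is_cuda)
```

In practice, `t.to(device)` with a `device` chosen via `is_available()` achieves the same thing without a helper.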

If you want to hardcode the device, use the following code sample instead.

```python
import torch

device = torch.device('cuda')
```

# Getting the error when your input tensors use the GPU, but your model weights don't

You might also get the error if your input tensors use the GPU, but your model weights don't.
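To see which case you are in, you can check where the weights live by inspecting the device of the model's first parameter. This is a minimal sketch. The small `nn.Sequential` model stands in for your own model, and it assumes that all parameters share one device, which is the usual situation after a single `.to()` or `.cuda()` call.

```python
import torch
import torch.nn as nn

# Stand-in for your own model
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))
if torch.cuda.is_available():
    model = model.cuda()

# All parameters were moved together, so the first one tells you where the weights are
weights_device = next(model.parameters()).device
print(weights_device)

# Send the inputs to the same device before the forward pass
x = torch.randn(5, 4).to(weights_device)
print(model(x).device)
```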

To solve the error in this case, use the `is_available()` method to check if your system supports CUDA and call the `cuda()` method on the model.

```python
import torch

# `YourModel` is a placeholder for your own nn.Module subclass
model = YourModel()

if torch.cuda.is_available():
    model = model.cuda()
```

You can also use the model.to() method to achieve the same result.

```python
import torch

model = YourModel()

if torch.cuda.is_available():
    model.to('cuda')
    data = data.to('cuda')
```

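You can also reassign the `model` variable to the result of calling `model.to("cuda")`. For an `nn.Module`, `to()` moves the parameters in place and returns the module itself, so both forms work. For tensors, `to()` returns a new tensor, so the reassignment is required (as with `data` above).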

```python
model = model.to('cuda')
```
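The difference between modules and tensors can be checked directly. This sketch uses a small `nn.Linear` and a zero tensor as example objects:

```python
import torch
import torch.nn as nn

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = nn.Linear(3, 1)
returned = model.to(device)
# nn.Module.to() moves the parameters in place and returns the same module
print(returned is model)  # True

t = torch.zeros(3)
t.to(torch.float64)       # result discarded: `t` is unchanged
print(t.dtype)            # torch.float32
t = t.to(torch.float64)   # reassign to keep the converted tensor
print(t.dtype)            # torch.float64
```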

If you want to hardcode the device, you can also use the following code sample.

```python
import torch

device = torch.device('cuda')

model = YourModel()
model = model.to(device)

data = data.to(device)
```

Alternatively, if the device is given as a string (for example, hardcoded), you can check if the string "cuda" is present in it.

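```python
import torch

# A device string, e.g. hardcoded; the `in` check below works on strings,
# not on torch.device objects
device = 'cuda'

if 'cuda' in device and not torch.cuda.is_available():
    device = 'cpu'

# `data` and `model` are assumed to be defined elsewhere
data = data.to(device)
model.to(device)
```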

If the string "cuda" is present in `device` and CUDA is not currently available, we set the device to "cpu".
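The same fallback can be wrapped in a reusable function. In this sketch, `resolve_device` is a hypothetical helper name. It checks `torch.device(...).type` instead of the `in` operator, so it also handles indexed strings such as `"cuda:1"`.

```python
import torch

def resolve_device(requested: str) -> torch.device:
    """Hypothetical helper: honour the requested device, falling back to the CPU."""
    device = torch.device(requested)
    if device.type == 'cuda' and not torch.cuda.is_available():
        device = torch.device('cpu')
    return device

device = resolve_device('cuda')
print(device)
```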

# RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu

If you get the "RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu" error, make sure that all operations are done on the same device.

You can do this by calling the .to(device) method on your tensors.

```python
import torch

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

count0 = torch.zeros(1).to(device)
count1 = torch.zeros(1).to(device)
count2 = torch.zeros(1).to(device)
```

Notice that I called .to(device) on the tensors.

This ensures that all operations are done on the same device.
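A variant of the same idea is to pass `device=` straight to the tensor factory function. This creates each tensor on the target device instead of allocating it on the CPU and then copying it. The sketch also collects the devices of the tensors as a quick sanity check before combining them.

```python
import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Factory functions accept device=, so the tensors are created on the target device
count0 = torch.zeros(1, device=device)
count1 = torch.zeros(1, device=device)
count2 = torch.zeros(1, device=device)

# Sanity check before combining tensors: exactly one device should be in use
devices = {t.device for t in (count0, count1, count2)}
print(devices)

total = count0 + count1 + count2
```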

If the `device` variable is not defined, you can read the device from an existing tensor through its `.device` attribute, e.g. `y.device` or `x.device`.

```python
device = y.device  # or device = x.device
```
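This pattern is handy inside functions that create new tensors. Here is a minimal sketch, in which `add_ones` is a hypothetical example function. It builds its helper tensor on whatever device the input already uses.

```python
import torch

def add_ones(x: torch.Tensor) -> torch.Tensor:
    """Hypothetical example: create a helper tensor on the same device as `x`."""
    ones = torch.ones(x.shape[-1], device=x.device)
    return x + ones

x = torch.randn(2, 3)
if torch.cuda.is_available():
    x = x.cuda()

y = add_ones(x)
print(y.device)
```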

# Make sure to set the device argument when applying transformations

Another common cause of the error is not setting the `device` argument when applying transformations (e.g. when creating an `nn.Linear` layer).

Here is an example of how to correctly set the device argument.

```python
import torch
import torch.nn as nn

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

# if device is not defined, do x.device or y.device
m1 = nn.Linear(20, 100, device=device)
```

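By default, the `device` argument is set to `None`, which means the parameters are created on the default device (the CPU unless you have changed it). Make sure to set it explicitly.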
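Below is a quick way to confirm where a layer's parameters were created. It compares a layer built without the `device` argument to one built with it. The sketch assumes that the process's default device has not been changed.

```python
import torch
import torch.nn as nn

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

default_layer = nn.Linear(20, 100)                # device=None: default device (normally the CPU)
placed_layer = nn.Linear(20, 100, device=device)  # parameters created directly on `device`

print(default_layer.weight.device)
print(placed_layer.weight.device)
```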

