
Last updated: Apr 13, 2024


# RuntimeError: CUDA out of memory. Tried to allocate X MiB

The PyTorch "RuntimeError: CUDA out of memory. Tried to allocate X MiB" occurs when you run out of memory on your GPU.

You can solve or diagnose the error in multiple ways:

- Reduce the batch size of the data that is passed to your model.

- Run the torch.cuda.empty_cache() method to release all unoccupied cached memory.

- Delete unused variables and run the garbage collector.

- Use the torch.cuda.memory_summary() method to get a human-readable summary of the memory allocator statistics.

Here is the complete error message.

```
RuntimeError: CUDA out of memory. Tried to allocate X MiB (GPU X; X GiB total capacity; X GiB already allocated; X MiB free; X cached)
```

# Reduce the batch size and train your models on smaller images

The first thing you should try is to reduce the batch size and train your models on smaller images.

You can try to set the batch_size argument to 1.

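```python
from torch.utils.data import DataLoader

# dataset and collate_wrapper are your own Dataset and collate function
loader = DataLoader(
    dataset,
    batch_size=1,
    collate_fn=collate_wrapper,
    pin_memory=True,
)
```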

You can then gradually increase the batch_size to find the largest value your GPU can handle.
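To automate this "start small, then increase" approach, here is a minimal sketch that doubles the batch size until an out-of-memory error occurs. run_step is a hypothetical callable you would write yourself to run one training step with a given batch size. Detecting the error by matching "out of memory" in the message is an assumption that covers both the plain RuntimeError raised by older PyTorch versions and torch.cuda.OutOfMemoryError (a RuntimeError subclass) raised by newer ones.

```python
def is_oom_error(err):
    """Return True if err looks like a CUDA out-of-memory error (assumption: message match)."""
    return isinstance(err, RuntimeError) and "out of memory" in str(err)


def find_max_batch_size(run_step, start=1, limit=1024):
    """Double the batch size until run_step runs out of memory.

    run_step is a hypothetical callable that runs one training step with the
    given batch size. Returns the largest batch size that succeeded, or None
    if even `start` runs out of memory.
    """
    best = None
    batch_size = start
    while batch_size <= limit:
        try:
            run_step(batch_size)
        except RuntimeError as err:
            if not is_oom_error(err):
                raise  # a different error, don't hide it
            break  # this batch size doesn't fit, stop searching
        best = batch_size
        batch_size *= 2
    return best
```

Because the search doubles each time, the result is only accurate to within a factor of two, and you may want to leave some headroom since memory usage can vary from batch to batch.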

Training your models on smaller images will consume less memory.

For example, if your image has a shape of 256x256, try reducing its size to 128x128.

Resizing the image will often resolve the error.
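If your images are already tensors, one way to shrink them is torch.nn.functional.interpolate. A minimal sketch, assuming a float batch of shape (N, C, H, W); the random batch below is a hypothetical placeholder for your data. Halving both sides leaves a quarter of the pixels, and since activation memory in convolutional layers scales roughly with the number of pixels, that memory shrinks by about the same factor.

```python
import torch
import torch.nn.functional as F

# Hypothetical batch of 16 RGB images of size 256x256, shape (N, C, H, W)
images = torch.rand(16, 3, 256, 256)

# Downsample to 128x128 with bilinear interpolation
small = F.interpolate(images, size=(128, 128), mode="bilinear", align_corners=False)

print(small.shape)  # torch.Size([16, 3, 128, 128])
print(images.numel() // small.numel())  # 4
```

If you load images with torchvision, its Resize transform can do the same at load time instead.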

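You can also reduce memory consumption by moving your data to the GPU one batch at a time as you iterate over the loader.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

for features, labels in loader:
    features = features.to(device)
    labels = labels.to(device)
```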

This is much better than sending all your data to CUDA at once.

# Try to release the unoccupied cached memory

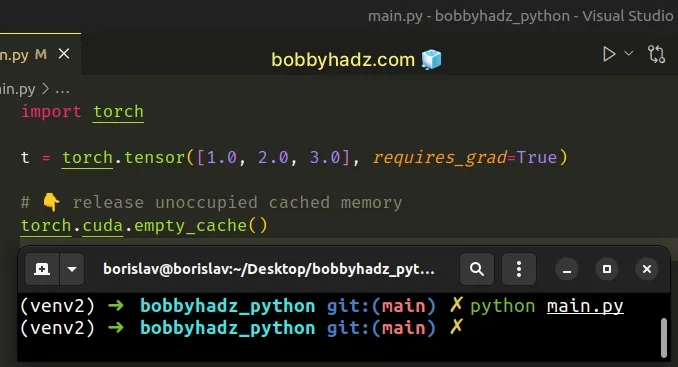

You can also try releasing the unoccupied cached memory by running torch.cuda.empty_cache().

```python
import torch

t = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# 👇️ Release unoccupied cached memory
torch.cuda.empty_cache()
```

The torch.cuda.empty_cache() method releases all unoccupied cached memory that is currently held by the caching allocator.

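The released memory can then be used by other GPU applications. Note that memory still occupied by live tensors is not released, so delete the tensors you no longer need first.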

The torch.cuda.empty_cache() method should be used before the code block that causes the error.
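To see what empty_cache() actually releases, you can compare torch.cuda.memory_allocated() (memory occupied by live tensors) with torch.cuda.memory_reserved() (memory held by the caching allocator). A minimal sketch; the helper cuda_memory_mib is hypothetical, and the demo part only runs when a CUDA device is available.

```python
import torch


def cuda_memory_mib():
    """Return allocated/reserved CUDA memory in MiB, or None without a GPU."""
    if not torch.cuda.is_available():
        return None
    mib = 1024 ** 2
    return {
        # Memory occupied by live tensors
        "allocated": torch.cuda.memory_allocated() / mib,
        # Memory held by the caching allocator (live tensors + cached blocks)
        "reserved": torch.cuda.memory_reserved() / mib,
    }


if torch.cuda.is_available():
    x = torch.empty(16, 1024, 1024, device="cuda")  # 64 MiB of float32
    del x  # the tensor is freed, but its block stays reserved in the cache
    print("before empty_cache:", cuda_memory_mib())
    torch.cuda.empty_cache()  # return unoccupied cached blocks to the GPU
    print("after empty_cache:", cuda_memory_mib())
```

After del x the "allocated" figure drops, while "reserved" only drops once empty_cache() runs.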

# Try to garbage collect the unused variables

You can also try to garbage-collect the unused variables in your application.

```python
import gc
import torch

t = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

del t
gc.collect()
```

The del statement deletes the variable name. The memory is freed once no other references to the object remain.

The gc.collect() method runs the garbage collector.

When no arguments are passed to the method, it runs a full garbage collection.
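To see why both steps can matter, here is a small pure-Python sketch using weakref; the Node class is a hypothetical stand-in for a large object such as a model or tensor. In CPython, del frees an object as soon as its last reference disappears, but objects that reference each other in a cycle stay alive until the garbage collector runs.

```python
import gc
import weakref


class Node:
    """Hypothetical stand-in for a large object (e.g. a model or tensor)."""

    def __init__(self):
        self.other = None


gc.disable()  # turn off automatic collection so the demo is deterministic

# Case 1: no reference cycle. In CPython, del frees the object right away.
a = Node()
ref_a = weakref.ref(a)
del a
freed_a = ref_a() is None

# Case 2: a reference cycle. del only removes the names.
b, c = Node(), Node()
b.other, c.other = c, b  # b and c reference each other
ref_b = weakref.ref(b)
del b, c
freed_b_after_del = ref_b() is None  # the cycle still keeps b and c alive
gc.collect()  # a full collection finds and frees the unreachable cycle
freed_b_after_collect = ref_b() is None

gc.enable()
print(freed_a, freed_b_after_del, freed_b_after_collect)  # True False True
```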

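You will often run into memory consumption issues in loops because Python uses function scoping rather than block scoping. A variable assigned in a loop keeps its value (for example, a large tensor) alive until it is reassigned or goes out of scope, which can be long after the loop is complete.

You can get around this by deleting variables inside the loop once you're done working with them.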

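```python
# f, g and h are placeholders for your own functions
def process(inputs, f, g, h):
    result = 0
    for i in range(5):
        intermediate = f(inputs[i])
        result += g(intermediate)
        # 👇️ Delete variable here
        del intermediate
    output = h(result)
    return output
```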

Now intermediate is deleted on each iteration, so its memory can be freed right away and is no longer held while h() runs after the loop.

# Getting a human-readable printout of the memory allocator statistics

You can also use the torch.cuda.memory_summary() method to get a human-readable printout of the memory allocator statistics for a given device.

```python
import torch

t = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

print(
    torch.cuda.memory_summary(
        device=None,
        abbreviated=False
    )
)
```

Calling the method is useful when handling out-of-memory exceptions.
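For example, here is a minimal sketch that wraps a function (such as a hypothetical training step), prints the allocator summary when it runs out of CUDA memory, and then re-raises the error. Matching "out of memory" in the message is an assumption that covers both the older RuntimeError and the newer torch.cuda.OutOfMemoryError subclass; the summary is only printed when a CUDA device is available.

```python
import torch


def run_with_oom_report(fn, *args, **kwargs):
    """Call fn; on a CUDA out-of-memory error, print allocator stats and re-raise."""
    try:
        return fn(*args, **kwargs)
    except RuntimeError as err:
        if "out of memory" in str(err) and torch.cuda.is_available():
            print(torch.cuda.memory_summary(device=None, abbreviated=False))
        raise  # keep the original error and traceback
```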

# Try to restart your code editor

If the error persists, try to restart your code editor.

You can also try to close the window completely and reopen it to make sure the previous process has exited and released all of its GPU memory.

You might often run into this issue when running your code in VS Code.

Another thing you can try is to:

- Disable the GPU.

- Restart the Jupyter Kernel.

- Reactivate the GPU.

# Provisioning an instance with more GPU memory

If you get the error when processing data in the cloud (e.g. on AWS), try to provision an instance with more GPU memory.

If the error persists even with a small batch size, the model that you're loading into GPU memory is likely too large for your current instance.
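Before provisioning a bigger instance, it can help to check how much memory the current GPU has. A minimal sketch using torch.cuda.mem_get_info(), which returns the free and total device memory in bytes; the helper name gpu_memory_gib is hypothetical.

```python
import torch


def gpu_memory_gib(device=0):
    """Return (free, total) memory in GiB for a CUDA device, or None without a GPU."""
    if not torch.cuda.is_available():
        return None
    free, total = torch.cuda.mem_get_info(device)  # both values are in bytes
    gib = 1024 ** 3
    return free / gib, total / gib


info = gpu_memory_gib()
if info is not None:
    print(f"free: {info[0]:.1f} GiB / total: {info[1]:.1f} GiB")
```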

# Finding the process that consumes GPU memory

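Run nvidia-smi to list the processes that are currently using your GPU. The processes table in its output shows the process ID (PID) and GPU memory usage of each process. You can also look up a process ID by name with pgrep.

```shell
nvidia-smi

# or find a process ID by (part of) its command line
pgrep -f YOUR_SCRIPT_NAME
```

If a stale process (e.g. a script or Jupyter kernel that didn't exit cleanly) is holding GPU memory, use its process ID to stop it.

```shell
sudo kill -9 YOUR_PROCESS_ID
```

Make sure to replace the YOUR_PROCESS_ID placeholder with the PID you got from running the previous command.

Try to rerun your script after ending the process.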
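If you'd rather list GPU processes from Python (for example, to log them when a job fails), here is a minimal sketch that calls nvidia-smi's query mode through subprocess. The helper names are hypothetical, and the parser assumes the csv,noheader,nounits format requested in the command, with one pid, process_name, used_memory row per process. Some platforms report the memory as [N/A], which the sketch maps to None.

```python
import shutil
import subprocess


def parse_compute_apps(csv_text):
    """Parse 'pid, process_name, used_memory' rows into (pid, name, MiB) tuples."""
    processes = []
    for line in csv_text.strip().splitlines():
        if "," not in line:
            continue  # skip blank or informational lines
        pid, rest = line.split(",", 1)  # pid is the first field
        name, used = rest.rsplit(",", 1)  # used_memory is the last field
        used = used.strip()
        processes.append(
            (int(pid), name.strip(), int(used) if used.isdigit() else None)
        )
    return processes


def gpu_processes():
    """List (pid, name, used MiB) for GPU compute processes via nvidia-smi."""
    if shutil.which("nvidia-smi") is None:
        return []  # nvidia-smi isn't installed on this machine
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-compute-apps=pid,process_name,used_memory",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_compute_apps(result.stdout)
```

Calling gpu_processes() returns an empty list on machines without nvidia-smi, so you can use it to pick the PID to pass to kill.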

# Working with computations that require gradients

You might also run into GPU memory consumption issues if you accumulate autograd history across your training loop.

```python
total_loss = 0

for i in range(5000):
    optimizer.zero_grad()
    output = model(input)
    loss = criterion(output)
    loss.backward()
    optimizer.step()
    total_loss += loss
```

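The total_loss += loss line accumulates history across your training loop. Because loss is a differentiable tensor with autograd history, total_loss becomes a tensor that keeps a reference to the loss of every iteration, so memory usage grows with each iteration.

You can resolve the issue by replacing the line with total_loss += float(loss) (or total_loss += loss.item()), which adds a plain Python number instead of a tensor.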
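The difference can be checked on the CPU with a small sketch. The model, optimizer, loss function and data below are hypothetical placeholders chosen only to make the loop runnable.

```python
import torch

torch.manual_seed(0)

# A tiny hypothetical model and data set, just to illustrate the point
model = torch.nn.Linear(4, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = torch.nn.MSELoss()
inputs = torch.randn(8, 4)
targets = torch.randn(8, 1)


def train(steps, as_float):
    total_loss = 0
    for _ in range(steps):
        optimizer.zero_grad()
        loss = criterion(model(inputs), targets)
        loss.backward()
        optimizer.step()
        if as_float:
            total_loss += float(loss)  # plain Python number, no autograd history
        else:
            total_loss += loss  # tensor that keeps extending the autograd graph
    return total_loss


bad = train(3, as_float=False)
good = train(3, as_float=True)
print(type(bad).__name__, bad.requires_grad, bad.grad_fn is not None)  # Tensor True True
print(type(good).__name__)  # float
```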

# Other tips for reducing GPU memory usage

You should also avoid holding on to tensors that you no longer need, since a tensor's memory is only released once nothing references it anymore.

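If you use an RNN decoder, avoid unrolling it over very long sequences (large loops), since the memory needed for backpropagation grows with the number of time steps.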
